While learning Elixir, I've found that it's often-times similar feel to Ruby can be both a blessing and a curse. One the one hand, its semantic and eloquent style makes it feel familiar and manageable. On the other hand, being a part of the well-established Ruby community has spoiled me for support systems and tools. It's easy to forget that Elixir is still in its infancy and feel surprise when the community doesn't provide quite the level of support that Ruby does.

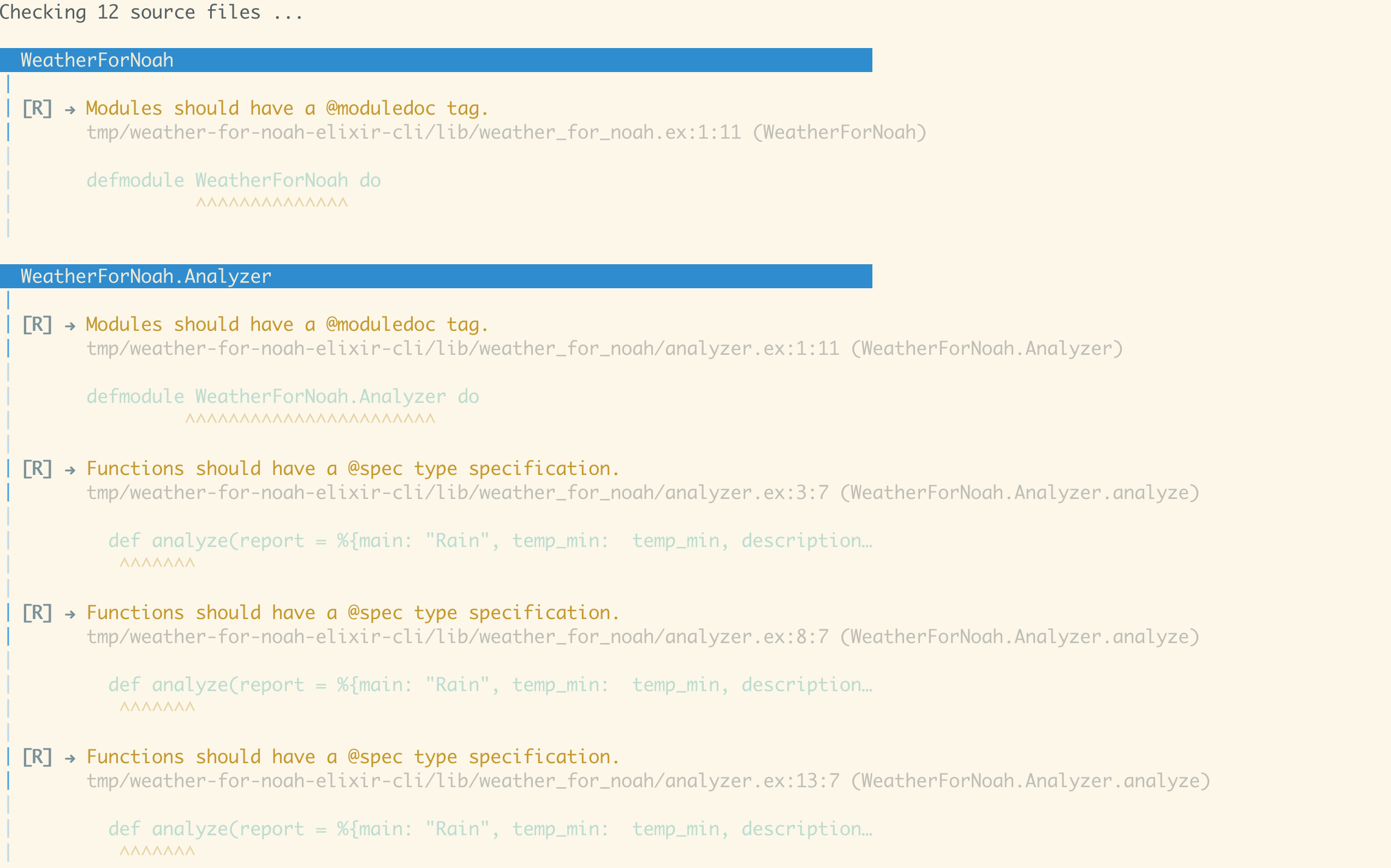

For example, as a Rubyist, I'm used to having my pick of code quality tools––Rubocop, Rubycritic and Flog are just a few that come to mind while I write this. Elixir doesn't yet provide such a wealth of options, but one code quality tool has proven to be well documented, well maintained and comprehensive. Credo can

show you refactoring opportunities in your code, complex and duplicated code fragments, warn you about common mistakes, show inconsistencies in your naming scheme and - if needed - help you enforce a desired coding style.

Credo is easy to use--simply add it to the dependencies of any Elixir project and run it from the command line like this:

$ mix credo

It will run through all of the files specified in your projects config/.credo.exs and check them on various code quality metrics. It will then print out a nicely formatted and prettily colored report marking the ares of your code that need improvement, explaining why those areas are not up to code (get it?) and suggesting changes.

Credo is a great tool, but I wanted a way to quickly and easily run it against a number of projects, without needing to go through the work of cloning down the repo, adding Credo as a dependency, creating a Credo config file for the project, and running it myself. I wanted a quick, easy and modular way to execute Credo against any project that could be run from the command line or be packaged into a web application.

Enter Elixir Linter, a OTP application for running Credo against any GitHub repo from your command line. In this post, we'll walk through the build of this application step-by-step, paying particular attention to how OTP supervisors are leveraged to design a fast and fault-tolerant engine for running Credo.

In my next post, we'll take our OTP app and make it command line executable with the help of a CLI module and the Mix Escripts task. Then, stay tuned for a later post on incorporating our OTP app in a Phoenix application so we can run our tool on the web.

Let's get started!

OTP Architecture

Program Requirements

Before we start writing any code, let's lay out the architecture of our OTP application. We'll begin by breaking down the jobs that our app will have to be responsible for.

- Given a repo name, our app will need to clone it down.

- Given a cloned-down repo, our app will need to run Credo against that repo and collect the results.

- Once the repo has been linted for code quality, our app will need to output those results to the command line.

- Also once the repo has been linted for code quality, the application will need to remove the cloned repo from its cloned location.

Now that we understand the basic responsibilities of our application, let's identify some of the opportunities for the program to fail. This will help us to design our supervisor tree.

- The application could fail to clone down the repo.

- The application could fail to properly lint the repo.

- The application could fail to properly output the results of the lint.

- The application could fail to remove the cloned repo.

Our supervisor tree should account for these failure points by having a supervisor dispatch each discreet task. That way, if any task fails, its parent will be able to spin it back up and try again.

The Supervisor Tree

We will start up our OTP app by starting our top-level supervisor with an argument of the name of the repo want to clone and lint.

We will have a top-level supervisor that runs two children: a worker in charge of storing the name of the repo we are trying to clone (our "repo name store") and a sub-supervisor.

This sub-supervisor will know the PID of the repo name store, and pass it to a child worker that does the heavy lifting of cloning and linting the repo. We'll call this our "server".

Our server will in turn supervise the main tasks of:

- Cloning the repo to a temp folder

- Linting the repo

- Outputting the results

- Removing the cloned repo from the temp folder

Ready to lint some code? This cat is:

Generating an OTP Application

Mix makes it easy to generate the skeleton for a basic OTP application.

$ mix new elixir_linter --sup

This generates a project with the following structure:

└── elixir_linter

├── README.md

├── config

│ └── config.exs

├── lib

│ └── elixir_linter.ex

├── mix.exs

└── test

├── elixir_linter_test.exs

└── test_helper.exs

The lib/elixir_linter.ex file is the entry point of our app. The call to ElixirLinter.start will start up our entire supervisor tree. So, this is the function in which we should start our top-level supervisor by calling on our top-level supervisor module. We'll keep it simple:

defmodule ElixirLinter do

use Application

def start(_type, repo_name) do

{:ok, _pid} =

ElixirLinter.Supervisor.start_link([repo_name])

end

end

We'll begin with our Supervisor module.

Building the Top-Level Supervisor

We'll define our top-level supervisor in a new subdirectory, lib/elixir_linter/.

# lib/elixir_linter/supervisor.ex

defmodule ElixirLinter.Supervisor do

use Supervisor

def start_link(repo) do

# start children

end

def init(_) do

# start supervising and institute supervision strategy

end

end

First, we pull in the Supervisor module with the help of the use Supervisor line.

Then, in order to conform to and leverage that module, we define two functions: #start_link and #init

We call the #start_link function to start up our supervisor, which will in turn invoke the #init callback function.

# lib/elixir_linter/supervisor.ex

...

def start_link(repo) do

result = {:ok, sup} = Supervisor.start_link(__MODULE__, [repo])

start_workers(sup, repo)

result

end

def start_workers(sup, repo) do

{:ok, store} = Supervisor.start_child(sup, worker(ElixirLinter.Store, [repo]))

Supervisor.start_child(sup, supervisor(ElixirLinter.SubSupervisor, [store]))

end

def init(_) do

supervise [], strategy: :one_for_one

end

Let's break this down.

-

First, our

start_linkfunction is designed to take an an argument of GitHub repository, which will eventually be passed in from the command line in the format:owner/repo_name. -

start_linkcalls onSupervisor.start_link, passing it the name of the module, and the repo name as an argument. This starts our supervisor running. -

Then we call on a helper function,

start_workers, to start the children of our supervisor tree. One of our children is a worker process, the running of ourElixirLinter.Store(coming soon!), in which we will store the repo name. It get's started up like this:

Supervisor.start_child(sup, worker(ElixirLinter.Store, [repo]))

To the Supervisor.start_child call, we pass in our supervisor process PID, sup, and the worker it will supervise--the worker running ElixirLinter.Store. This will start the ElixirLinter.Store module running via ElixirLinter.Store.start_link(repo). We'll come back to that when we're ready to build the store.

Our start-up of the store worker returns a tuple with {:ok, store}, in which store represents the PID of the worker running the ElixirLinter.Store module.

Our second child process is itself a supervisor. When we build out that sub-supervisor in a bit, we'll teach it to run our server module, which will in turn run several tasks, as laid out in our earlier diagram. It get's started up by this line:

Supervisor.start_child(sup, supervisor(ElixirLinter.SubSupervisor, [store]))

Here, we pass Supervisor.start_link our supervisor process PID, as sup, and the instruction supervisor(ElixirLinter.SubSupervisor, [store], which starts up a supervisor worker running our ElixirLinter.SubSupervisor module. That module will be started up automatically via its own #start_link function, with an argument of the store PID.

Now that our top-level supervisor knows how to get started up, and in turn start the worker running the store module and the sub-supervisor that will run the server module, we're ready to build out the next level of our supervisor tree.

The ElixirLinter.Store Module

Why Do we Need a Store? Fault Tolerance!

The purpose of this module is to act as a central repository for the name of the repo passed in to our supervisor tree when it starts up. We want to isolate the repo name and store it here in the second level of our supervisor tree so that the sub-supervisor child process can always retrieve the repo name should any of it's children fail and need to be restarted. If a failure occurs that causes the server module running our repo-cloning code to die, for example, the sub-supervisor will be able to start it back up and retrieve the repo name from the store module.

Now that we know why we need it, let's build it!

Using Agents to Maintain State

Our store module has just one responsibility––hold on to the repo name that get's passed in to our top-level supervisor.

We'll use Elixir's Agent module to store repo name is state.

# lib/elixir_linter/store.ex

defmodule ElixirLinter.Store do

def start_link(repo) do

Agent.start_link(fn -> %{repo_name: repo} end, name: __MODULE__)

end

end

When the store module is started up via start_link, it starts an agent running.

Agent.start_link takes in a first argument of a function, and the return value of that function becomes the state of the module.

We know that our store module will need to respond with the repo name when asked by the sub-supervisor, so we'll build out a get_repo method that does just that.

# lib/elixir_linter/store.ex

defmodule ElixirLinter.Store do

def start_link(repo) do

Agent.start_link(fn -> %{repo_name: repo} end, name: __MODULE__)

end

def get_repo(pid) do

Agent.get(pid, fn dict -> dict[:repo_name] end)

end

end

Our get_repo function wraps a call to Agent.get, passing in the PID of the process whose state we want to query. Agent.get takes in a second argument of a function, which will be automatically passed an argument of the current state of the process whose pid is the first argument. Since the state of our store module process is the map returned by the function we passed to Agent.start_link, we can use the dict[] function to retrieve the repo name we stored earlier.

Now we're ready to build out the sup-supervisor process that will take in the store module process's PID and use it to retrieve the repo name, clone down the repo and lint it for code quality.

The Sub-Supervisor Module

The sub-supervisor itself is fairly simple, it's job is to start up the main work horse of our application, the server module, and supervise it.

Recall that the top-level supervisor starts up the store worker and captures its PID to pass to the start-up of the sub-supervisor:

# lib/elixir_linter/server.ex

{:ok, store} = Supervisor.start_child(sup, worker(ElixirLinter.Store, [repo]))

Supervisor.start_child(sup, supervisor(ElixirLinter.SubSupervisor, [store]))

This will automatically call ElixirLinter.SubSupervisor.start_link(store). So let's build out the sub-supervisor's start_link function to take in the store process's PID.

defmodule ElixirLinter.SubSupervisor do

use Supervisor

def start_link(store_pid) do

{:ok, _pid} = Supervisor.start_link(__MODULE__, store_pid)

end

def init(store_pid) do

child_processes = [worker(ElixirLinter.Server, [store_pid])]

supervise child_processes, strategy: :one_for_one

end

end

Our start_link function starts up the supervisor, passing in an argument of the PID of the store worker.

Calling Supervisor.start_link, inside our own ElixirLinter.SubSupervisor module invokes our init function, which starts a worker running our server module, and tells our sub-supervisor to supervise it.

Now that we see how and when our ElixirLinter.Server module gets started with an argument of the store worker's PID, let's build out our work horse module, ElixirLinter.Server.

The Work-Horse: ElixirLinter.Server

Before we start writing code, let's remind ourselves what our server module has to do for us.

It needs to:

- Clone down the given repo to the

tmp/ - Lint that repo for code quality (with the help of Credo)

- Output the results to the command line

- Remove the cloned down repo from the

tmp/directory

Of course it would be madness to have just one module handle all of these responsibilities, so we will rely on a number of helper modules. But our server module will be responsible for spinning up these helpers and supervising them as needed.

First things first, our start_link function:

# lib/elixir_linter/server.ex

defmodule ElixirLinter.Server do

def start_link(store_pid) do

repo_name = List.first(ElixirLinter.Store.get_repo(store_pid))

{:ok, task_supervisor_pid} = Task.Supervisor.start_link()

Agent.start_link(fn -> %{repo_name: repo_name, task_supervisor: task_supervisor_pid} end, name: __MODULE__)

end

We're doing a few things here:

- Retrieve the repo name from the worker running the

ElixirLinter.Storemodule. - Start another supervisor process, this one will be used to supervise the cloning and linting tasks.

- Store the repo name and the task supervisor process PID in this module's state, with the help of

Agent.start_link.

Next up, we'll want to invoke functions that run those tasks. Our goal is for the following code to work:

# lib/elixir_linter/server.ex

defmodule ElixirLinter.Server do

def start_link(store_pid) do

repo_name = List.first(ElixirLinter.Store.get_repo(store_pid))

{:ok, task_supervisor_pid} = Task.Supervisor.start_link()

Agent.start_link(fn -> %{repo_name: repo_name, task_supervisor: task_supervisor_pid} end, name: __MODULE__)

fetch_repo

|> lint_repo

|> process_lint

end

Let's build out those functions now, then we'll build the modules that support them.

# lib/elixir_linter/server.ex

...

def fetch_repo do

get_task_supervisor

|> Task.Supervisor.async(fn ->

get_repo

|> ElixirLinter.RepoFetcher.fetch

end)

|> Task.await

end

def get_repo do

Agent.get(__MODULE__, fn dict -> dict[:repo_name] end)

end

def get_task_supervisor do

Agent.get(__MODULE__, fn dict -> dict[:task_supervisor] end)

end

Let's break down our fetch_repo function.

- First, is uses a helper function,

get_task_supervisorto retrieve the task supervisor PID from state. - Then, it tells the task supervisor to supervise the execution of an anonymous function that does two things:

- Fetch the repo name from state

- Pass that repo name to the execution of our

ElixirLinter.RepoFetch.fetchfunction. This is the module where we'll build the "cloning down the repo" functionality. More on this later.

- Lastly, we pass the return of calling

Task.Supervisor.async, aTaskstruct, toTask.await. which will return to us the result of the anonymous function that our task supervisor executes. This will, in effect, return the return ofElixirLinter.RepoFetcher.fetch. We'll code this function later on to return the destination to which we've cloned the repo, i.e. the filepath to the cloned directory. In this way, we will be able to pass that filepath to our linting function so Credo knows which files to lint.

It's important to understand that we used Task.async and Task.await to wait on the execution of our asynchronous repo-cloning code and return the result of that code's execution.

This return value get's piped in to our lint_repo function.

Let's take a look at that function now.

...

def lint_repo(filepath) do

get_task_supervisor

|> Task.Supervisor.async(fn ->

ElixirLinter.Linter.lint(filepath)

end)

|> Task.await

end

lint_repo behaves similarly to fetch_repo. It retrieves the task supervisor PID from state and uses it to spin up and supervise the execution of our linter module's lint method. We'll build out that module in a bit.

This function to uses Task.await to wait for and capture the return of executing our linting code so that it can be piped into our next function, the process_lint function, which will be responsible for outputting the results to the command line. So, when we build out our ElixirLinter.Linter.lint function, we'll need to make sure it returns the results of the code quality checks.

Lastly, our process_lint function:

...

def process_lint(results) do

repo_name = get_repo

worker = get_task_supervisor

|> Task.Supervisor.async(fn ->

ElixirLinter.RepoFetcher.clean_up(repo_name)

end)

ElixirLinter.Cli.print_to_command_line(results)

Task.await(worker)

end

This function will take in the results of the code quality checks run by Credo, and pass them to the ElixirLinter.Cli module to be output to the terminal. We'll also build out this module shortly.

This function is also responsible for running some code to "clean up" after ourselves, i.e. remove the cloned repo from the tmp/ directory into which we originally cloned it.

This functionality is once again managed by our task supervisor process.

Now that we have our server module spec-ed out, we'll build our helper modules.

Cloning the Repo

The ElixirLinter.RepoFetcher module is responsible for cloning down the given repo, as well as removing it after the code quality lint is complete.

We'll clone down our repo with the help of the Porcelain Elixir library, which will allow us to execute shell commands from inside our Elixir program. Think of it as the equivalent of Ruby's system method.

First things first, we'll need to add Porcelain to our application's dependencies and make sure our app starts up the Porcelain application with it starts itself up.

# mix.exs

defmodule ElixirLinter.Mixfile do

use Mix.Project

def project do

[app: :elixir_linter,

version: "0.1.0",

elixir: "~> 1.3",

build_embedded: Mix.env == :prod,

start_permanent: Mix.env == :prod,

deps: deps()]

end

def application do

[applications: [:logger, :porcelain]]

end

defp deps do

[

{:porcelain, "~> 2.0"}

]

end

Then we'll run mix deps.get to install our new dependency.

Now we're ready to build out our RepoFetcher module.

Our main function is fetch_repo. It needs to clone down the repo into a tmp/ directory, which we'll create as an empty directory in the root of our project.

# lib/elixir_linter/repo_fetcher.ex

defmodule ElixirLinter.RepoFetcher do

@dir "tmp"

def fetch(repo) do

repo

|> get_repo_name

|> clone_repo_to_tmp(repo)

end

def get_repo_name(repo) do

String.split(repo, "/")

|> List.last

end

def clone_repo_to_tmp(repo_name, repo) do

Porcelain.shell("git clone https://#{Application.get_env(:elixir_linter, :github_oauth_token)}:x-oauth-basic@github.com/#{repo} #{@dir}/#{repo_name}")

"#{@dir}/#{repo_name}"

end

Notice that we've set a module attribute to store the name of the directory to which we are cloning repos: tmp.

The fetch_repo method pipes the full name of the repo, owner/repo_name into the get_repo_name function. This function grabs just the name of the repo, minus the owner's name, so that we are able to return the final destination of the repo, its cloned location in tmp/.

fetch_repo calls on a helper function, clone_repo_to_tmp, which uses Porcelain to execute the git clone shell command.

And that's it. Our server module's call to ElixirLinter.RepoFetcher.fetch_repo will clone the repo to tmp/ and return the path to the cloned repo: tmp/some_great_elixir_repo. This filepath gets piped into the server module's call to ElixirLinter.Linter.lint. Let's build that our now.

Linting the Repo with Credo

Our Linter module will rely on Credo to check the repo for code quality. First things first, let's include Credo in our application dependencies and run mix deps.get.

defmodule ElixirLinter.Mixfile do

use Mix.Project

def project do

[app: :elixir_linter,

version: "0.1.0",

elixir: "~> 1.3",

build_embedded: Mix.env == :prod,

start_permanent: Mix.env == :prod,

deps: deps()]

end

def application do

[applications: [:logger, :porcelain, :credo]]

end

defp deps do

[

{:credo, "~> 0.5", only: [:dev, :test]},

{:porcelain, "~> 2.0"}

]

end

Our Linter module will wrap up a call to Credo's Check.Runner module:

Credo.Check.Runner.run(parsed_source_files, config)

So, we'll need to pass in a list of the Elixir files in a the given project, parsed by Credo.SourceFile.parse, which breaks them down into their constituent lines, and the Credo config map that is the result of running the following Credo code with filepath to a project that includes a valid Credo config file:

Credo.Config.read_or_default(filepath, nil, true)

This call to Credo.Config.read_or_default takes in an argument of a path to a directory. If the directory contains a config/.credo.exs file, Credo will read that file. If not, it will look for a config/.credo.exs file in the current project. This is perfect for us because it allows us to include a default config file in our own application, while respecting the Credo configuration that the individual projects we clone down may include.

Let's take this one step at a time. First, we'll collect a list of all the Elixir files in the given directory.

Listing All the Project Files

We'll build a function, list_all, that takes in the path to the project and iterates over its directories, recursively listing all the files and collecting them in a new list, provided they end in .ex or .exs.

# lib/elixir_linter/linter.ex

defmodule ElixirLinter.Linter do

def lint(filepath) do

source_files = list_all(filepath)

|> Enum.map(&Credo.SourceFile.parse(File.read!(&1), &1))

end

def list_all(filepath) do

_list_all(filepath)

end

defp _list_all(filepath) do

cond do

String.contains?(filepath, ".git") -> []

true -> expand(File.ls(filepath), filepath)

end

end

defp expand({:ok, files}, path) do

files

|> Enum.flat_map(&_list_all("#{path}/#{&1}"))

end

defp expand({:error, _}, path) do

collect_file({is_elixir_file?(path), path})

end

defp collect_file({true, path}), do: [path]

defp collect_file({false, path}), do: []

defp is_elixir_file?(path) do

String.contains?(path, ".ex") || String.contains?(path, ".exs")

end

end

We won't spend a lot of time going over our list_all code here. If you want to dig in deeper, check out my post on Building a Recursive Function To List All Files in a Directory

Next up, we'll construct the Credo config map.

Credo Config

# lib/elixir_linter/linter.ex

defmodule ElixirLinter.Linter do

def lint(filepath) do

source_files = list_all(filepath)

|> Enum.map(&Credo.SourceFile.parse(File.read!(&1), &1))

config = Credo.Config.read_or_default(filepath, nil, true)

|> Map.merge(%{skipped_checks: [], color: true})

end

Running Credo Checks

Now we have the parsed source files and the config with which to run Credo:

# lib/elixir_linter/linter.ex

defmodule ElixirLinter.Linter do

def lint(filepath) do

source_files = list_all(filepath)

|> Enum.map(&Credo.SourceFile.parse(File.read!(&1), &1))

config = Credo.Config.read_or_default(filepath, nil, true)

|> Map.merge(%{skipped_checks: [], color: true})

Credo.Check.Runner.run(source_files, config)

end

This returns the results of the check in the following format:

{results, config}

In which results is a list of maps, each map representing a single checked file with keys like :filename and :issues . The :issues key points to another list of maps, each map representing a found issue and including its line number of origin, name and description.

This tuple will get passed by our server module to our Cli module for output to the terminal via ElixirLinter.Cli.print_to_command_line({results, config})

Printing The Results

Later on, our Cli module will grow to take input from the command line. For now, we'll focus on the print_to_command_line function which takes in an argument of the result tuple from our Linter.lint function call.

# lib/elixir_linter/cli.ex

defmodule ElixirLinter.Cli do

def print_to_command_line({results, config}) do

output = Credo.CLI.Output.IssuesByScope

output.print_before_info(results, config)

output.print_after_info(results, config, 0, 0)

end

end

Our function is simple, it really just wraps up some existing Credo CLI code.

Removing the Cloned Repo

We're almost done! We just need to build the the RepoFetcher.clean_up function which will remove the cloned repo after Credo is done checking it.

# lib/elixir_linter/repo_fetcher.ex

...

def clean_up(repo) do

IO.puts "Removing repo #{repo}......"

repo

|> get_repo_name

|> delete_repo_if_cloned

end

def delete_repo_if_cloned(repo_name) do

File.ls!(@dir)

|> Enum.member?(repo_name)

|> remove_repo(repo_name)

end

def remove_repo(true, repo_name), do: File.rm_rf("#{@dir}/#{repo_name}")

def remove_repo(false, repo_name), do: nil

Here, we:

- List the files in the

tmp/directoy. - Pipe the results of that action into the

remove_repofunction, which uses pattern matching to either remove the repo if it is present, or do nothing if it is not.

And that's it!

Conclusion

In my next post, we'll turn our application into an executable with the help of Escripts. In the meantime, let's sum up what we've covered here.

We:

- Built an OTP application that runs a supervisor tree.

- Used that tree to store repository name data and run a server that fetches that data and uses it to clone down repositories and check them for code quality.

- Used that tree to run and supervise tasks.

- Integrated a third-party application, Porcelain, and leveraged it to clone down GitHub repos.

- Integrated a third-party application, Credo, and leveraged it to lint and output code quality issues to the terminal.

One last disclaimer--I'm still very new to Elixir, and I'm sure there are improvements that can be made to the pattern laid out here. Feel free to share pointers or questions in the comments. Thanks and happy coding!